🎹 Zero-Shot CoT Prompting

Abstract

This section covers zero-shot chain-of-thought (Zero-shot CoT) prompting.

💢 Overview

Zero-shot Chain of Thought prompting (ZS CoT) offers a simple way to improve LLM reasoning. Unlike few-shot CoT prompting, which provides examples with worked reasoning steps, ZS CoT prompting just adds the phrase "Let's think step by step" to the prompt. This phrase encourages the LLM to generate its own chain of thought before answering, which tends to improve its reasoning.

While sacrificing some control and transparency compared to few-shot CoT, Zero-shot-CoT offers an accessible and effective way to advance the reasoning capabilities of LLMs across various domains. Importantly, Zero-shot-CoT is versatile and task-agnostic: the same phrase is used regardless of the task, with no task-specific examples.

💢 How it works

- Basic prompt- Instead of providing examples with detailed reasoning steps, ZS CoT simply adds the magic phrase "Let's think step by step" to the original prompt.
- Internal chain generation- The phrase “Let's think step by step” does the magic. The LLM generates its own reasoning steps based on its understanding of the prompt and then generates the answer.
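The prompt construction described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `build_zero_shot_cot_prompt` and the `Q:` / `A:` layout are assumptions made for this example; the only part taken from the text is the trigger phrase itself.

```python
# The trigger phrase is the only ingredient ZS CoT adds to the prompt.
TRIGGER = "Let's think step by step."


def build_zero_shot_cot_prompt(question: str, trigger: str = TRIGGER) -> str:
    """Append the trigger phrase to a question; no worked examples are included.

    The "Q:" / "A:" framing is an assumed layout for this sketch.
    """
    return f"Q: {question.strip()}\nA: {trigger}"


prompt = build_zero_shot_cot_prompt(
    "A juggler has 16 balls. Half are golf balls, and half of the golf "
    "balls are blue. How many blue golf balls are there?"
)
print(prompt)
```

The resulting string is what would be sent to the model. Note that it contains no demonstrations, only the question and the trigger phrase.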

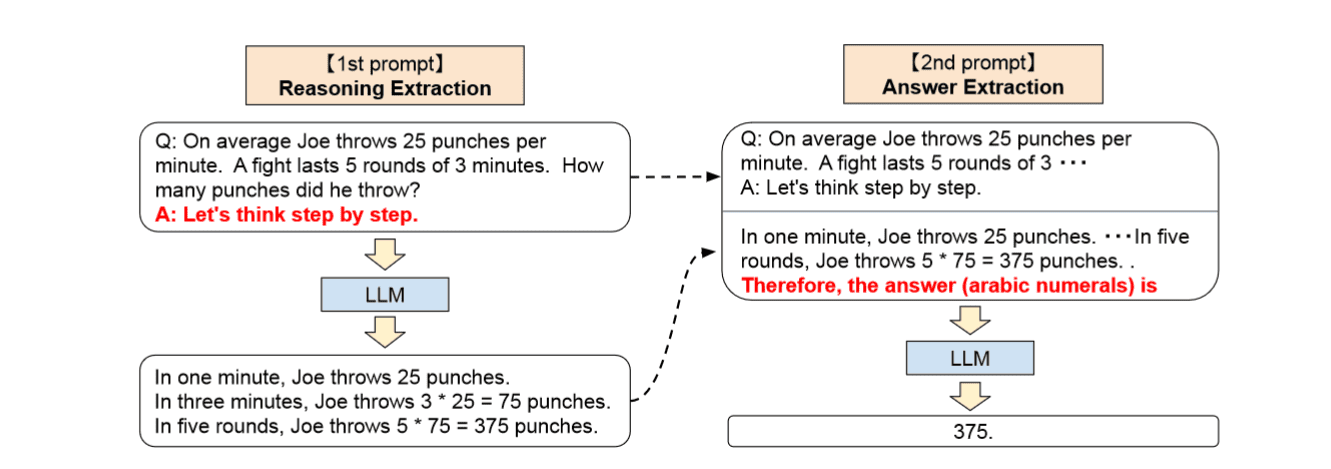

As shown in the figure above, ZS CoT involves two steps: “reasoning extraction” and “answer extraction”. In the first step, the model generates the reasoning steps based on the original prompt and the magic phrase. In the second step, the model generates the answer based on the original prompt, the magic phrase, and the generated reasoning steps.
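The two steps can be sketched as two consecutive model calls. In the sketch below, `llm` is any callable that maps a prompt string to a completion string, and `fake_llm` is a hypothetical stand-in with canned replies so the example runs without a real model. The answer-extraction cue `"Therefore, the answer is"` and the `Q:` / `A:` layout are assumptions for illustration and are not specified in this section.

```python
from typing import Callable, Tuple

TRIGGER = "Let's think step by step."
# Assumed cue for the second step; the text above does not fix its wording.
ANSWER_TRIGGER = "Therefore, the answer is"


def zero_shot_cot(question: str, llm: Callable[[str], str]) -> Tuple[str, str]:
    """Run the two ZS CoT steps and return (reasoning, answer)."""
    # Step 1, reasoning extraction: original prompt + magic phrase.
    reasoning_prompt = f"Q: {question}\nA: {TRIGGER}"
    reasoning = llm(reasoning_prompt).strip()

    # Step 2, answer extraction: original prompt + magic phrase
    # + generated reasoning, followed by the answer cue.
    answer_prompt = f"{reasoning_prompt} {reasoning}\n{ANSWER_TRIGGER}"
    answer = llm(answer_prompt).strip()
    return reasoning, answer


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, returning canned text."""
    if prompt.endswith(ANSWER_TRIGGER):
        return " 4."
    return " There are 16 balls. Half are golf balls, so 8. Half of those are blue, so 4."


reasoning, answer = zero_shot_cot(
    "A juggler has 16 balls. Half are golf balls, and half of the golf "
    "balls are blue. How many blue golf balls are there?",
    fake_llm,
)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

Swapping `fake_llm` for a function that calls an actual model is the only change needed to try this on real questions. Passing the model in as a callable keeps the sketch independent of any particular provider.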

💢 Pros

- Ease of use- No need for examples, just append the magic phrase “Let's think step by step”.
- Flexibility- Similar to the original CoT, Zero-shot-CoT can be applied to various tasks and domains.
- Enhanced Performance- Boosts LLM reasoning on diverse tasks.
- Increased Transparency- Makes answers more transparent.

💢 Cons

- Performance might be lower- Less effective than few-shot CoT with examples.
- Less control- Users have less control over the LLM's internal thought process because of lack of reasoning steps in the prompt.

To summarize, ZS CoT prompting doesn’t require examples as few-shot CoT does; it instructs LLMs to solve the problem step by step just by including the phrase “Let's think step by step”. Zero-shot-CoT presents a convenient way to leverage the benefits of CoT prompting.