🧱 LLM Introduction

Abstract

This section gives a brief introduction to large language models (LLMs).

Chatbots like ChatGPT and Bard are among the main reasons behind the recent surge in popularity of generative AI. These chatbots are built on top of advanced deep learning models called large language models.

Large language models (LLMs) are a special class of pretrained language models obtained by scaling model size, pretraining corpus, and computation. Because of their large size and pretraining on large volumes of text data, LLMs exhibit special abilities that allow them to achieve remarkable performance on many natural language processing tasks without any task-specific training.

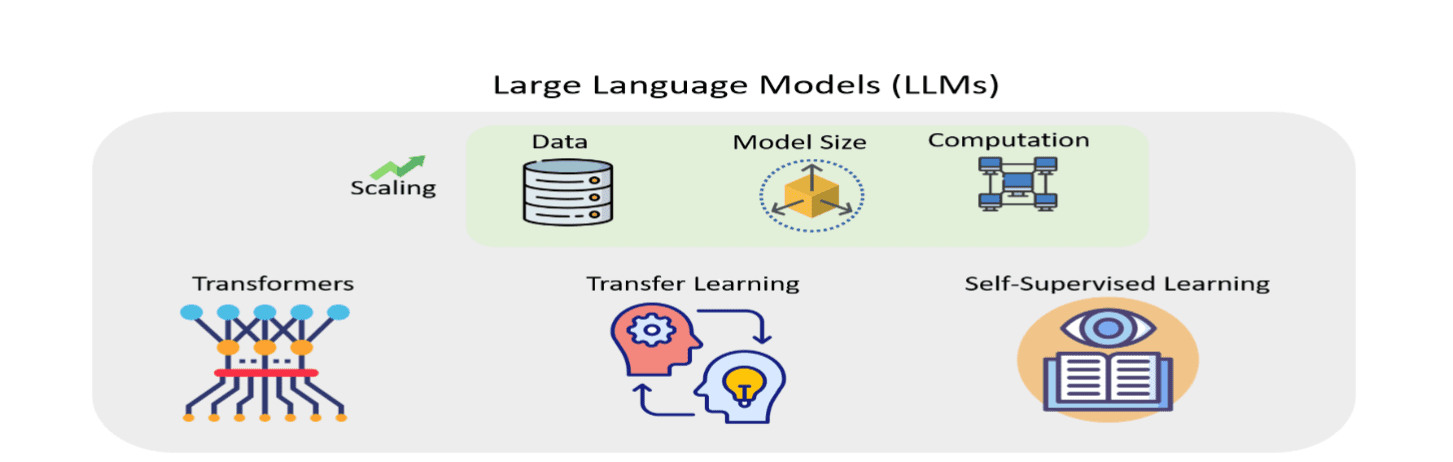

The era of LLMs started with OpenAI’s GPT-3 model, and the popularity of LLMs has grown rapidly since the introduction of models like GPT-3, GPT-3.5, and GPT-4. As shown in the figure, LLMs are built on top of the transformer, an advanced deep learning model, together with concepts like transfer learning and self-supervised learning.

Transformer

Before the transformer model, NLP research focused on traditional deep learning models like MLP, CNN, RNN, LSTM, GRU, and encoder-decoders. The transformer model emerged as an effective alternative, addressing issues like vanishing gradients and the difficulty of handling long-term context. Because of its novel architecture and self-attention mechanism, the transformer quickly gained popularity and has become the first choice for building pretrained language models and large language models.
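To make the self-attention mechanism mentioned above more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not how any particular model implements it: the function names (`softmax`, `self_attention`) and the toy embeddings are hypothetical, and the learned query, key, and value projections of a real transformer are omitted, so the same vectors serve all three roles.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token embeddings (hypothetical values for illustration only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Every token attends to every other token in a single step, which is why distant context does not have to pass through a long chain of recurrent updates.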

Transfer Learning

Transfer learning, in the context of artificial intelligence, involves transferring existing knowledge from one task (or domain) to a different but related task (or domain). It avoids training a model from scratch and helps improve the model’s performance on the target task (or domain) by leveraging knowledge that already exists.

Self-Supervised Learning

Self-supervised learning, a promising learning paradigm in artificial intelligence, helps models across modalities such as language, speech, and images learn background knowledge from large volumes of unlabelled data. Unlike supervised learning, which relies on large volumes of labelled data, self-supervised learning pretrains models at scale using the pseudo-supervision offered by one or more pretraining tasks. Here, the pseudo-supervision comes from labels that are generated automatically, without human intervention, according to the definition of the pretraining task.
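As a rough illustration of how labels can be generated automatically from raw text, the sketch below builds training examples for two common pretraining tasks: next-token prediction and masked-token prediction. The function names, the whitespace tokenizer, the `[MASK]` placeholder string, and the masking probability are all assumptions made for this example, not details taken from any specific model.

```python
import random


def next_token_examples(tokens, context_size=3):
    """Build (context, target) pairs: the label is simply the next token."""
    examples = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        examples.append((context, tokens[i]))
    return examples


def masked_examples(tokens, mask_prob=0.3, seed=0):
    """Hide random tokens; the hidden tokens themselves become the labels."""
    rng = random.Random(seed)
    masked, labels = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append("[MASK]")
            labels[i] = tok  # position -> original token
        else:
            masked.append(tok)
    return masked, labels


# Whitespace splitting stands in for a real tokenizer (an assumption).
text = "large language models learn from unlabelled text"
tokens = text.split()

pairs = next_token_examples(tokens)
masked, labels = masked_examples(tokens)

print(pairs[0])
print(masked, labels)
```

In both cases the supervision signal is derived from the text itself, so no human annotation is needed to produce the training targets.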

Scaling (Data, Model Size & Computation)

- Pretrained language models, from GPT-1 and BERT to later models such as DeBERTa, achieved significant progress and also reduced the amount of labelled data required to train task-specific models. Pretrained language models follow the “pretrain then fine-tune” paradigm: the model is pretrained first and then adapted to downstream tasks by fine-tuning. Because they cannot generalize to unseen downstream tasks without task-specific fine-tuning, these models are treated as narrow AI systems.

- A major goal of the research community is to develop artificial general intelligence (AGI) systems capable of general problem-solving. Researchers found that the performance of pretrained language models can be improved by scaling along three dimensions: pretraining computation, pretraining data, and model size.

- Larger models can capture more nuanced language patterns, enhancing text understanding and generation. Scaling, enabled by transfer learning and self-supervised learning, led to the creation of GPT-3. GPT-3 and its successor models are referred to as "large language models" to distinguish them from earlier pretrained language models.
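The three scaling dimensions are linked: more parameters and more training tokens both raise the pretraining compute. The sketch below uses a commonly cited rule of thumb, roughly 6 floating-point operations per parameter per training token. This approximation is not from this document, and the model configurations are hypothetical values chosen only to show how quickly compute grows.

```python
def approx_training_flops(num_params, num_tokens):
    """Rule-of-thumb estimate: about 6 FLOPs per parameter per training token."""
    return 6 * num_params * num_tokens


# Hypothetical configurations: (name, parameter count, training tokens).
configs = [
    ("small", 10**8, 2 * 10**9),
    ("medium", 10**9, 2 * 10**10),
    ("large", 10**10, 2 * 10**11),
]

for name, n_params, n_tokens in configs:
    flops = approx_training_flops(n_params, n_tokens)
    print(f"{name}: {n_params:.0e} params, {n_tokens:.0e} tokens, ~{flops:.1e} FLOPs")
```

Under this approximation, scaling both model size and data by 10x increases the estimated compute by 100x.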